For You: The worst things that have ever happened to everyone else.

TikTok’s all-powerful, all-knowing algorithm appears to have decided that I want to see some of the most depressing and disturbing content the platform has to offer. My timeline has become an endless doomscroll. Despite TikTok’s claims that its mission is to “bring joy,” I am not getting much joy at all.

What I am getting is a glimpse at just how aggressive TikTok is when it comes to deciding what content it thinks users want to see and pushing it on them. It’s a bummer for me, but potentially harmful to users whose timelines become filled with triggering or extremist content or misinformation. This is a problem with pretty much every social media platform as well as YouTube. But with TikTok, it feels even worse. The platform’s algorithm-centric design sucks users into that content in ways its rivals simply don’t. And those users tend to skew younger and spend more time on TikTok than they do anywhere else.

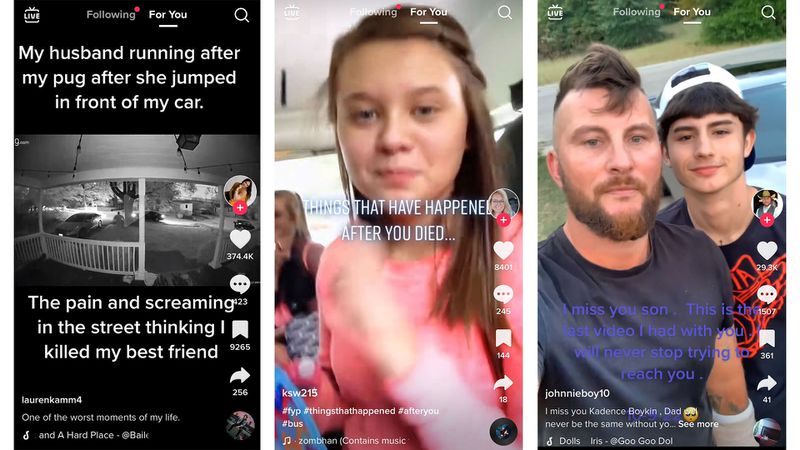

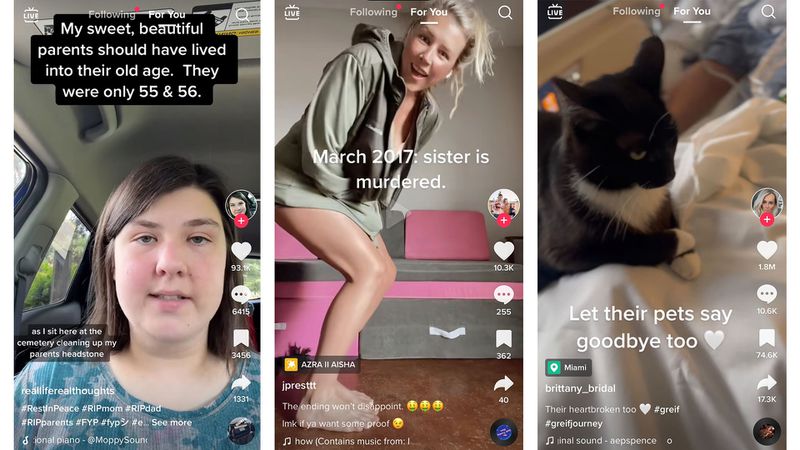

To give you a sense of what I’m working with here, my For You page — that’s TikTok’s front door, a personalized stream of videos based on what its algorithm thinks you’ll like — is full of people’s stories about the worst thing that has ever happened to them. Sometimes they talk to the camera themselves, sometimes they rely on text overlays to tell the story for them while they dance, sometimes it’s photos or videos of them or a loved one injured and in the hospital, and sometimes it’s footage from Ring cameras that show people accidentally running over their own dog. Dead parents, dead children, dead pets, domestic violence, sexual assault, suicides, murders, electrocutions, illnesses, overdoses — if it’s terrible and someone has a personal story to tell about it, it’s probably in my For You feed. I have somehow fallen into a rabbit hole, and it is full of rabbits that died before their time.

The videos often have that distinctive TikTok style that adds a layer of surrealness to the whole thing, often with the latest music meme. Videos are edited so that Bailey Zimmerman sings “that’s when I lost it” at the exact moment a woman reacts to finding out her mother is dead. Tears run down flawless, radiant, beauty-filtered cheeks. Liberal use of TikTok’s text-to-speech feature means a cheerful robot-y woman’s voice might be narrating the action. “Algospeak” — code words meant to get around TikTok’s moderation of certain topics or keywords — tells us that a boyfriend “unalived” himself or that a father “$eggsually a[B emoji]used” his daughter.

Oh, I also get a lot of ads for mental health services, which makes sense considering the kind of person TikTok seems to think I am.

TikTok is designed to suck you in and keep you there, starting with its For You page. The app opens automatically to it, and the videos autoplay. There’s no way to open to the feed of accounts you follow or to disable the autoplay. You have to opt out of watching what TikTok wants you to see.

“The algorithm is taking advantage of a vulnerability of the human psyche, which is curiosity,” Emily Dreyfuss, a journalist at the Harvard Kennedy School’s Shorenstein Center and co-author of the book Meme Wars, told me.

Watch time is believed to be a major factor when it comes to what TikTok decides to show you more of. When you watch one of the videos it sends you, TikTok assumes you’re curious enough about the subject to watch similar content and feeds it to you. It’s not about what you want to see, it’s about what you’ll watch. Those aren’t always the same thing, but as long as it keeps you on the app, that doesn’t really matter.

That ability to figure out who its users are and then target content to them based on those assumptions is a major part of TikTok’s appeal. The algorithm knows you better than you know yourself, some say. One reporter credited TikTok’s algorithm with knowing she was bisexual before she did, and she’s not the only person to do so. I thought I didn’t like what TikTok was showing me, but I had to wonder if perhaps the algorithm picked up on something in my subconscious I didn’t know was there, something that really wants to observe other people’s misery. I don’t think this is true, but I am a journalist, so ... maybe?

I’m not the only TikTok user who is concerned about what TikTok’s algorithm thinks of them. According to a recent study of TikTok users and their relationship with the platform’s algorithm, most TikTok users are very aware that the algorithm exists and the significant role it plays in their experience on the platform. Some try to create a certain version of themselves for it, what the study’s authors call an “algorithmized self.” It’s like how, on other social media sites, people try to present themselves in a certain way to the people who follow them. It’s just that on TikTok, they’re doing it for the algorithm.

Aparajita Bhandari, the study’s co-author, told me that many of the users she spoke to would like or comment on certain videos in order to tell the algorithm that they were interested in them and get more of the same.

“They had these interesting theories about how they thought the algorithm worked and how they could influence it,” Bhandari said. “There’s this feeling that it’s like you’re interacting with yourself.”

In fairness to TikTok and my algorithmized self, I haven’t given the platform much to go on. My account is private, I have no followers, and I only follow a handful of accounts. I don’t like or comment on videos, and I don’t post my own. I have no idea how or why TikTok decided I wanted to spectate other people’s tragedies, but I’ve definitely told it that I will continue to do so because I’ve watched several of them. They’re right there, after all, and I’m not above rubbernecking. I guess I rubbernecked too much.

I’ll also say that there are valid reasons why some of this content is being uploaded and shared. In some of these videos, the intent is clearly to spread awareness and help others, or to share a story with a community the poster hopes will be understanding and supportive. And some people just want to meme tragedy because I guess we all heal in our own way.

This made me wonder what this algorithm-centric platform is doing to people who may be harmed by falling down the rabbit holes their For You pages all but force them down. I’m talking about teens seeing eating disorder-related content, which the Wall Street Journal recently reported on. Or extremist videos, which aren’t all that difficult to find and which we know can play a part in radicalizing viewers on platforms that are less addictive than TikTok. Or misinformation about Covid-19 vaccines.

“The actual design choices of TikTok make it exceptionally intimate,” Dreyfuss said. “People say they open TikTok, and they don’t know what happens in their brain. And then they realize that they’ve been looking at TikTok for two hours.”

TikTok is quickly becoming the app people turn to for more than just entertainment. Gen Z users are apparently using it as a search engine — though the accuracy of the results seems to be an open question. They’re also using it as a news source, which is potentially problematic for the same reason. TikTok wasn’t built to be fact-checked, and its design doesn’t lend itself to adding context or accuracy to its users’ uploads. You don’t even get context as simple as the date the video was posted. You’re often left to try to find additional information in the video’s comments, which also have no duty to be true.

TikTok now says it’s testing ways to ensure that people’s For You pages have more diversified content. I recently got a prompt after a video about someone’s mother’s death from gastric bypass surgery asking how I “felt” about what I just saw, which seems to be an opportunity to tell the platform that I don’t want to see any more stuff like it. TikTok also has rules about sensitive content. Videos about subjects like suicide and eating disorders can be shared as long as they don’t glamorize those subjects, and content that features violent extremism, for instance, is banned. There are also moderators hired to keep the really awful stuff from surfacing, sometimes at the expense of their own mental health.

There are a few things I can do to make my For You page more palatable to me. But they require far more effort than it took to get the content I’m trying to avoid in the first place. Tapping a video’s share button and then “not interested” is supposed to help, though I haven’t noticed much of a change after doing this many times. I can look for topics I am interested in and watch and engage with those videos or follow their creators, the way the people in Bhandari’s study do. I also uploaded a few videos to my account. That seems to have made a difference. My videos all feature my dog, and I soon began seeing dog-related videos in my feed.

This being my feed, though, many of them were tragic, like a dying dachshund’s last photoshoot and a warning not to let your dogs eat corn cobs with a video of a man crying and kissing his dog as she prepares for a second surgery to remove the corn cob he fed her. Maybe, over time, the happy dog videos I’m starting to see creep onto my For You page will outnumber the sad ones. I just have to keep watching.

This story was first published in the Recode newsletter.

By: Sara Morrison

Title: TikTok won’t stop serving me horror and death

Sourced From: www.vox.com/recode/2022/10/26/23423257/tiktok-for-you-page-algorithm

Published Date: Wed, 26 Oct 2022 15:00:00 +0000
