The EU has taken a step closer to enforcing strong regulation of AI, drafting new safeguards that would prohibit a wide range of dangerous use cases.

These include prohibitions on mass facial recognition programs in public places and predictive policing algorithms that try to identify future offenders using personal data. The regulation also requires the creation of a public database of “high-risk” AI systems deployed by public and government authorities so that EU citizens can be informed about when and how they are being affected by this technology.

The law in question is a new draft of the EU’s AI Act, which was approved today by two key committees: the Internal Market Committee and the Civil Liberties Committee. These committees are made up of MEPs (members of the European Parliament) who have been charged with overseeing the legislation’s development. They approved the finished draft with 84 votes in favor, seven against, and 12 abstentions.

The AI Act itself is a sprawling document that’s been in the works for years, with the explosion of interest in generative AI tools this year forcing a number of significant and last-minute changes. This extra attention, though, seems to have focused lawmakers on the potential dangers of this fast-moving technology. Campaigners say the version of the act approved this morning — which still faces possible changes — is extremely welcome.

“It’s overwhelmingly good news,” Daniel Leufer, a senior policy analyst at nonprofit Access Now, told The Verge. “I think there’s been huge positive changes made to the text.”

“[It’s] globally significant,” Sarah Chander, senior policy advisor at digital advocacy group European Digital Rights, told The Verge. “Never has the democratic arm of a regional bloc like the EU made such a significant step on prohibiting tech uses from a human rights perspective.”

As Chander suggests, it’s likely these laws will affect countries around the world. The EU is such a significant market that tech companies often comply with EU-specific regulation on a global scale in order to reduce the friction of maintaining multiple sets of standards. Information demanded by the EU on AI systems will also be available globally, potentially benefitting users in the US, UK, and elsewhere.

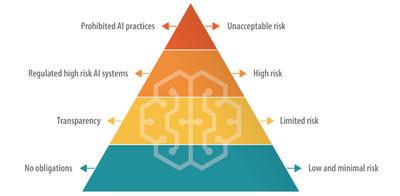

Image: European Commission

The main changes to the act approved today are a series of bans on what the European Parliament describes as “intrusive and discriminatory uses of AI systems.” As per the Parliament, the prohibitions — expanded from an original list of four — affect the following use cases:

- “Real-time” remote biometric identification systems in publicly accessible spaces;

- “Post” remote biometric identification systems, with the only exception of law enforcement for the prosecution of serious crimes and only after judicial authorization;

- Biometric categorisation systems using sensitive characteristics (e.g. gender, race, ethnicity, citizenship status, religion, political orientation);

- Predictive policing systems (based on profiling, location or past criminal behaviour);

- Emotion recognition systems in law enforcement, border management, workplace, and educational institutions; and

- Indiscriminate scraping of biometric data from social media or CCTV footage to create facial recognition databases (violating human rights and right to privacy).

In addition to the prohibitions, the updated draft introduces new measures aimed at controlling so-called “general purpose AI” or “foundational” AI systems — large-scale AI models that can be put to a range of uses. This is a poorly defined category even within the EU’s legislation but is intended to apply to resource-intensive AI systems built by tech giants like Microsoft, Google, and OpenAI. These include AI language models like GPT-4 and ChatGPT as well as AI image generators like Stable Diffusion, Midjourney, and DALL-E.

Under the proposed legislation, the creators of these systems will have new obligations to assess and mitigate various risks before these tools are made available. These include assessing the environmental damage of training these systems, which are often energy-intensive, and disclosing “the use of training data protected under copyright law.”

The last clause could have a significant effect in the US, where copyright holders have launched a number of lawsuits against the creators of AI image generators for using their data without consent. Many tech companies like Google and OpenAI have avoided these sorts of legal challenges by simply refusing to disclose what data they train their systems on (usually claiming this information is a trade secret). If the EU forces companies to disclose training data, it could open up lawsuits in the US and elsewhere.

Another key provision in the draft AI Act is the creation of a database of general-purpose and high-risk AI systems to explain where, when, and how they’re being deployed in the EU.

“This database should be freely and publicly accessible, easily understandable, and machine-readable,” says the draft. “The database should also be user-friendly and easily navigable, with search functionalities at minimum allowing the general public to search the database for specific high-risk systems, locations, categories of risk [and] keywords.”

The creation of such a database has been a long-standing demand of digital rights campaigners, who say that the public is often unwittingly experimented on in mass surveillance systems and their data collected without consent to train AI tools.

“One of the big problems is that we don’t know what [AI systems] are being used until harm happens,” says Leufer. He gives the example of the Dutch benefits scandal, in which an algorithm used by the government falsely accused thousands of families of benefits fraud. The database, he says, will help EU citizens arm themselves with information and make it easier for individuals and groups “to use existing laws to their full effect.”

Leufer notes that this purpose is somewhat undermined by the fact that the database is only mandatory for systems deployed by public and government authorities (it’s only voluntary in the private domain) but that its creation is still a step forward for transparency.

However, the AI Act is still subject to change. After its approval today by EU parliamentary committees, it will face a plenary vote next month before going into trilogues — a series of closed-door negotiations involving EU member states and the bloc’s controlling bodies.

Chander says that some of the prohibitions most prized by campaigners — including biometric surveillance and predictive policing — will cause “a major fight” at the trilogues. “Member states have argued that these uses of AI are necessary to fight crime and maintain security,” says Chander, noting that, at the same time, “rights experts have argued that surveillance does not equal safety, and in fact these uses of AI ... redirect policing and surveillance into already marginalized and over-policed communities.”

Following trilogues, the AI Act will need to be approved before spring 2024 — a tight turnaround for such a huge piece of legislation.


By: James Vincent

Title: EU draft legislation will ban AI for mass biometric surveillance and predictive policing

Sourced From: www.theverge.com/2023/5/11/23719694/eu-ai-act-draft-approved-prohibitions-surveillance-predictive-policing

Published Date: Thu, 11 May 2023 16:19:16 +0000
