Who’s using AI?

AI is suddenly everywhere. Image generators and large language models are at the core of new startups, powering features inside our favorite apps, and — perhaps more importantly — driving conversation not just in the tech world but also society at large. Concerns abound about cheating in schools with ChatGPT, being fooled by AI-generated pictures, and artists being ripped off or even outright replaced.

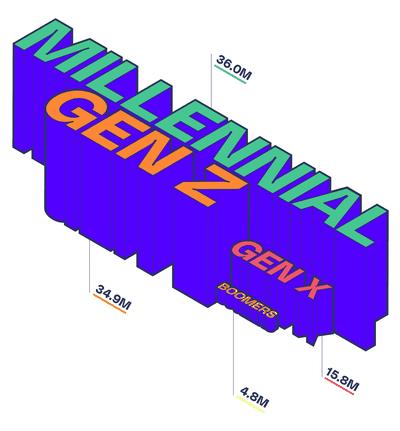

But despite widespread news coverage, use of these new tools is still fairly limited, at least when it comes to dedicated AI products. And experience with these tools skews decidedly toward younger users.

Most people have heard of ChatGPT. Bing and Bard? Not quite.

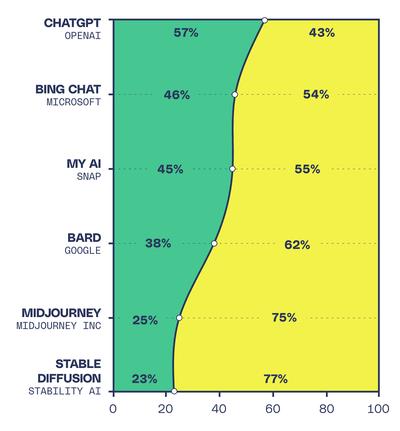

Only 1 in 3 people have tried one of these AI-powered tools, and most aren’t familiar with the companies and startups that make them. Despite the many insurgents in the world of AI, like Stability AI and Midjourney, it’s still the work of Big Tech that substantially steers the conversation. OpenAI is the major exception, but arguably, thanks to its valuation and deals with Microsoft, it is itself now a member of the corpo-club.

AI use is dominated by Millennials and Gen Z

One complicating factor, though, is that the definition of an AI tool is extremely fuzzy. We asked respondents about dedicated AI services like ChatGPT or Midjourney. But many companies are adding AI features to established software, whether that’s image generation in Photoshop or text suggestion in Gmail and Google Docs. And as the joke goes, AI is whatever computers haven’t done yet, meaning yesterday’s AI is, simply, today’s expected features.

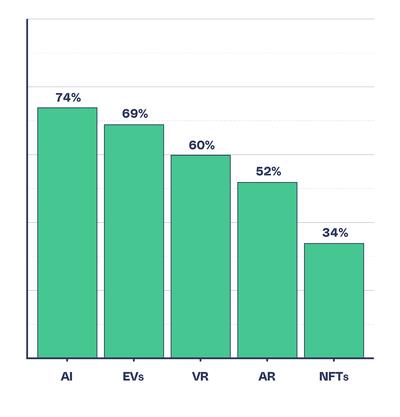

Despite the limited usage of these tools so far, people have high expectations for AI’s impact on the world — beyond those of other emergent (and sometimes controversial) technologies. Nearly three-quarters of people said AI will have a large or moderate impact on society. That’s compared to 69 percent for electric vehicles and a paltry 34 percent for NFTs. They’re so 2021.

Will these technologies have a big impact on society?

How is AI being used?

The main fuel for the recent boom is generative AI: systems that can generate text, help brainstorm ideas, edit your writing, and create pictures, audio, and video. These tools are being quickly integrated into professional systems (Photoshop can reimagine parts of images, WordPress can write blog posts), but for most users, they generally require quite a bit of oversight to produce good results.

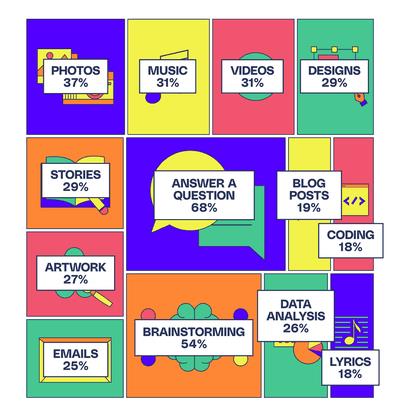

Search, brainstorming, and art dominate current AI use

For those who are using AI tools, creative experiments were most common. People are generating music and videos, creating stories, and tinkering with photos. More professional applications like coding were less common. And above all, people have simply been using AI systems to answer questions — suggesting chatbots like ChatGPT, Bing, and Bard may replace search engines, for better or worse.

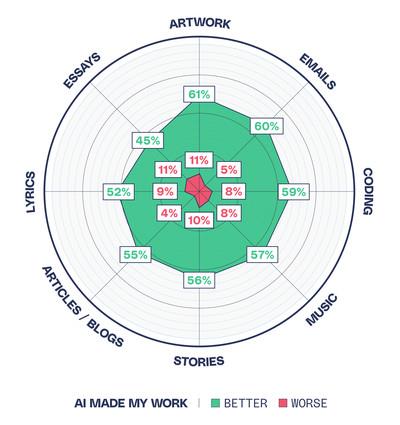

One finding is particularly clear: AI is expanding what people can create. In every category we polled, people who used AI said they used these systems to make something they couldn’t otherwise, with artwork being the most popular category within these creative fields. This makes sense given that AI image generators are much more advanced than tools that create audio or video.

Most people thought AI did a better job than they could have

The concerns around AI art

AI image generators like Midjourney and Stable Diffusion provide a good case study for broader issues involving generative AI. These systems are trained on huge amounts of data scraped from the web, usually without the consent of the original creators. While the ethics of this practice are hotly debated, its legality is currently being challenged in numerous lawsuits. These arguments are quickly spreading to other generative mediums, like AI song generation.

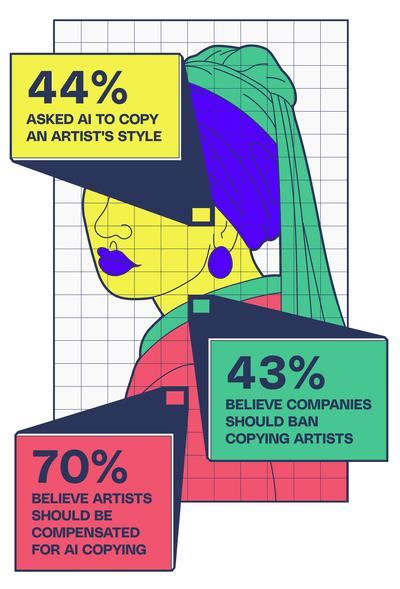

Our survey shows that people have mixed feelings about how to respond to these ethical quandaries. Most people think artists should get compensated when an AI tool clones their style, for example, but a majority also don’t want these capabilities to be limited. Indeed, almost half of respondents said they’d tested the system by generating exactly this sort of output.

A lot of people have copied an artist — and a lot of people want to ban it, too

People want better standards for AI

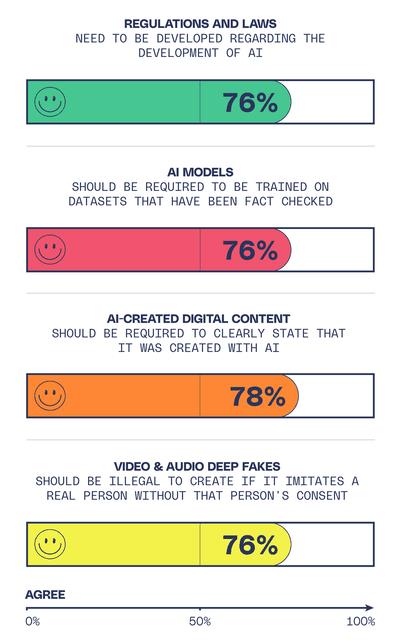

It’s not just tech leaders who are looking for AI tools to be controlled. More than three-quarters of respondents agreed with the statement, “Regulations and laws need to be developed regarding the development of AI.” These laws are currently in the works, with the EU AI Act working its way through final negotiations and the US recently holding hearings to develop its own legal framework.

Clearly, there’s strong demand for higher standards in AI systems and disclosure of their use. Strong majorities are in favor of labeling AI-generated deepfakes, for example. But many principles with wide support would be difficult to enforce, including training AI language models on fact-checked data and banning deepfakes of people made without their consent.

There’s broad support for regulations on AI

AI futures: excited, worried, and both at once

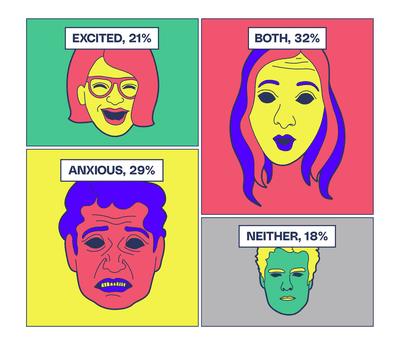

The animal spirits surrounding AI are decidedly ambivalent but lean, just slightly, toward pessimism.

When trying to predict the effect of AI on society, people forecast all sorts of dangers, from job losses (63 percent) to privacy threats (68 percent) and government and corporate misuse (67 percent). These dangers are weighted more heavily than potential positive applications, like new medical treatments (51 percent) and economic empowerment (51 percent). And when asked how they feel about the potential impact on their personal and professional life and on society more generally, people are pretty evenly split between worried and excited. Most often, they’re both.

How do people feel about AI’s impact on society?

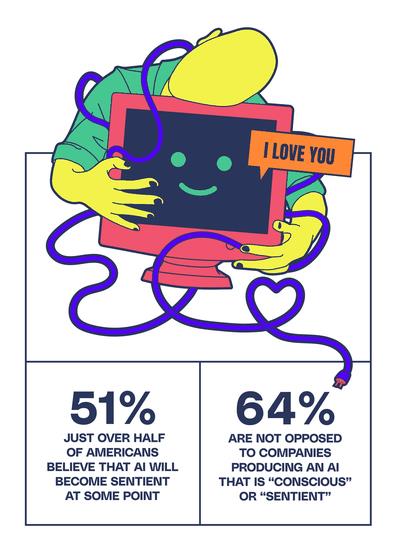

Quite a surprising number of people also consider the more adventurous predictions for AI to be reasonable outcomes. Fifty-six percent of respondents think “people will develop emotional relationships with AI,” and 35 percent of people said they’d be open to doing so if they were lonely.

Many people in the AI world are currently warning about the “existential risk” posed by AI systems — the hotly contested idea that superintelligent AI could doom humanity. If they want to speak to the population, they’ll find more than a few in agreement, with 38 percent agreeing with the statement that AI will wipe out human civilization. Perhaps that’s why more people are worried than not.

…and what about AI’s impact on personal life and jobs?

Appropriate to the uncertainty around AI, there’s an expansive openness to the idea of what could happen next. Roughly half of US adults expect that a sentient AI will emerge at some point in the future — and close to two-thirds don’t have an issue with companies trying to make one. If you already feel like there are too many concerns to grapple with when it comes to AI, you’d better hope we don’t get there.

If companies want to make AI sentient, most people won’t stand in the way


By: Jacob Kastrenakes, James Vincent

Title: Hope, fear, and AI

Sourced From: www.theverge.com/c/23753704/ai-chatgpt-data-survey-research

Published Date: Mon, 26 Jun 2023 15:00:00 +0000
